Led by companies like Glean (enterprise search), EvenUp (legal), and Typeface* (content creation), the first AI-native enterprise apps broke from the pack, distinguishing themselves from the wave of AI-native applications that emerged last year following the rise of OpenAI and Anthropic*.

These trailblazers look and feel different from the rest of the pack, many of which are still working to find product-market fit. By leveraging generative AI, they’ve unlocked markets long dominated by legacy incumbents. They’ve turned services into software, rewritten core workflows, and introduced novel architectural approaches that innovate on top of the foundation model.

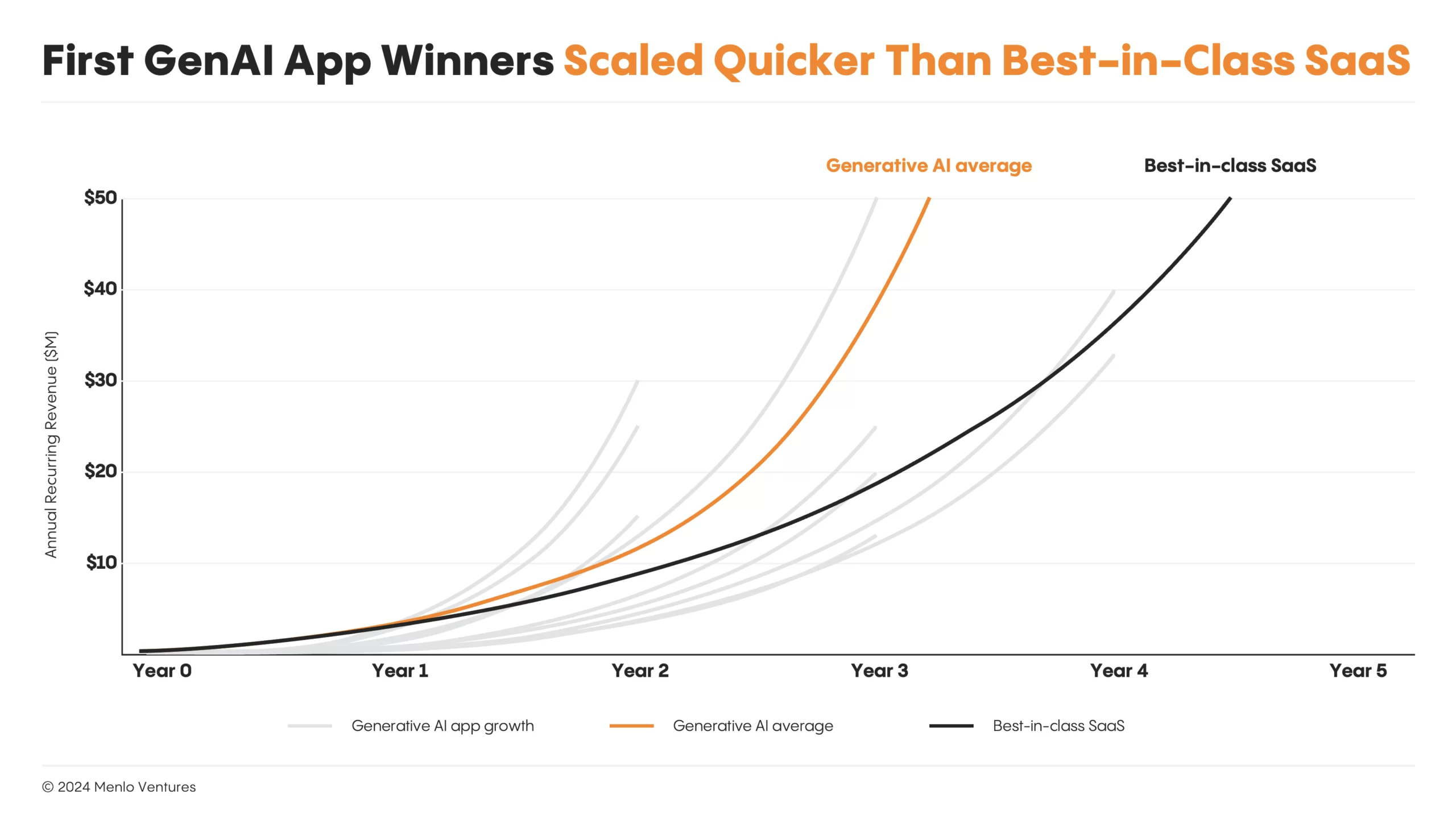

As a group, these companies grew faster than anything we’ve ever seen in the history of SaaS. The chart below illustrates the growth curves of 10 of these early winners, whose rapid ascents blew past all prior measures of outer potential. We studied these early winners and distilled the key strategies that made them successful in a new playbook designed to guide the next wave of AI-native enterprise apps.

Menlo’s Seven Golden Rules

1. Displace services with software

Software may be eating the world, but not as fast as you’d think. Nearly 80% of the economy is service-based, largely untouched by technology due to the abundance of unstructured data and complex reasoning requirements. Generative AI is now making inroads into these previously underserved sectors, turning SaaS on its head with “Services-as-Software.”

2. Target work that is high-value, high-volume, or facing labor shortages

The roles where AI can have the largest impact are high-value (e.g., software engineers and lawyers), high-volume (e.g., customer service and business development representatives), or in areas with worker shortages (e.g., nurses).

3. Seek pattern-based workflows with high engagement and usage

Most AI apps are copilots that share work with an employee, while some are agents that can automate tasks entirely. Either way, AI apps are more likely to find a moat in pattern-based workflows with regular usage and habitual engagement than in data or network effects alone.

4. Unlock proprietary data

Over 80% of enterprise data is inaccessible because it’s trapped in unstructured file formats or document stores like your email inbox. By leveraging LLMs to make this data usable, AI-native apps can build proprietary data moats over time.

5. Embrace zero marginal cost creation

Generative AI enables infinite scaling of written content, images, and potentially even software and products, unleashing creation possibilities across the enterprise—from cold sales outbounds to personalized marketing to compelling visual assets.

6. Build where incumbents aren’t, can’t, or won’t

Identify areas where GenAI can unlock net-new capabilities, incumbents are slow-moving, or the dominant player fails to imagine what is possible with AI.

7. Win with compound AI systems rather than models

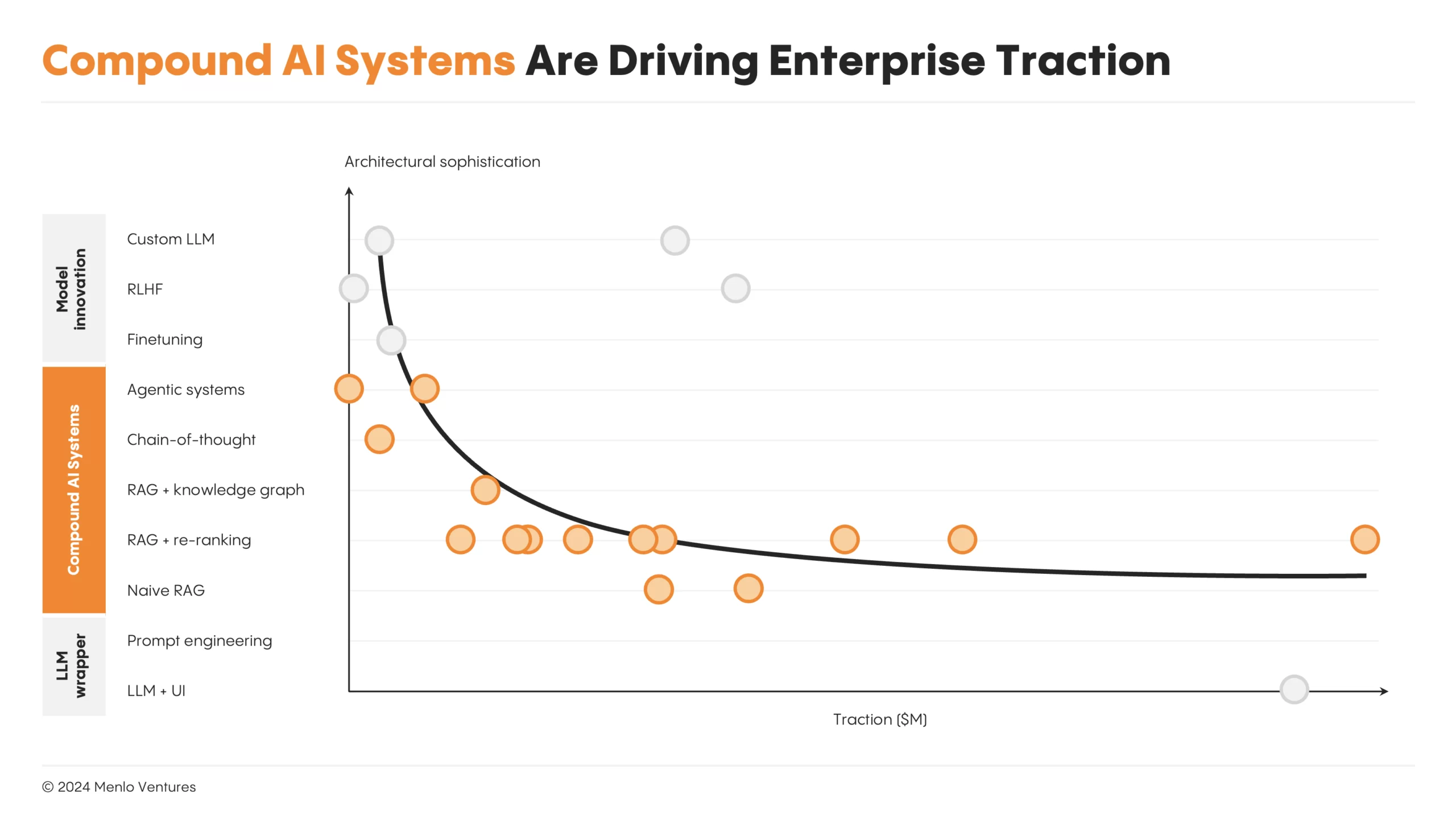

The most successful AI apps focus on adding value at the data and infrastructure level; they win through novel architectural approaches, including chain-of-thought, tool use, agents, and appropriate model choices for the function at hand (versus relying on a single monolithic model).
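One way to read "appropriate model choices for the function at hand" is as a routing table that sends each task type to a different model tier. The sketch below is illustrative only: the task names, `small_model`, and `large_model` are hypothetical stand-ins for real model calls, not anything the companies discussed here are known to use.

```python
from typing import Callable, Dict

ModelFn = Callable[[str], str]


def small_model(prompt: str) -> str:
    """Hypothetical stub for a fast, inexpensive model."""
    return f"[small] {prompt}"


def large_model(prompt: str) -> str:
    """Hypothetical stub for a slower, more capable model."""
    return f"[large] {prompt}"


# Assumed mapping from task type to model tier (placeholder task names).
ROUTES: Dict[str, ModelFn] = {
    "classify": small_model,
    "extract": small_model,
    "draft": large_model,
}


def route(task: str, prompt: str, default: ModelFn = large_model) -> str:
    """Send the prompt to the model assigned to this task, else to the default."""
    return ROUTES.get(task, default)(prompt)


if __name__ == "__main__":
    print(route("classify", "Is this ticket about billing?"))
    print(route("draft", "Write a reply to the customer."))
```

Keeping the mapping in one table means a cheaper or better model can be swapped in for a single task without touching the rest of the application.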

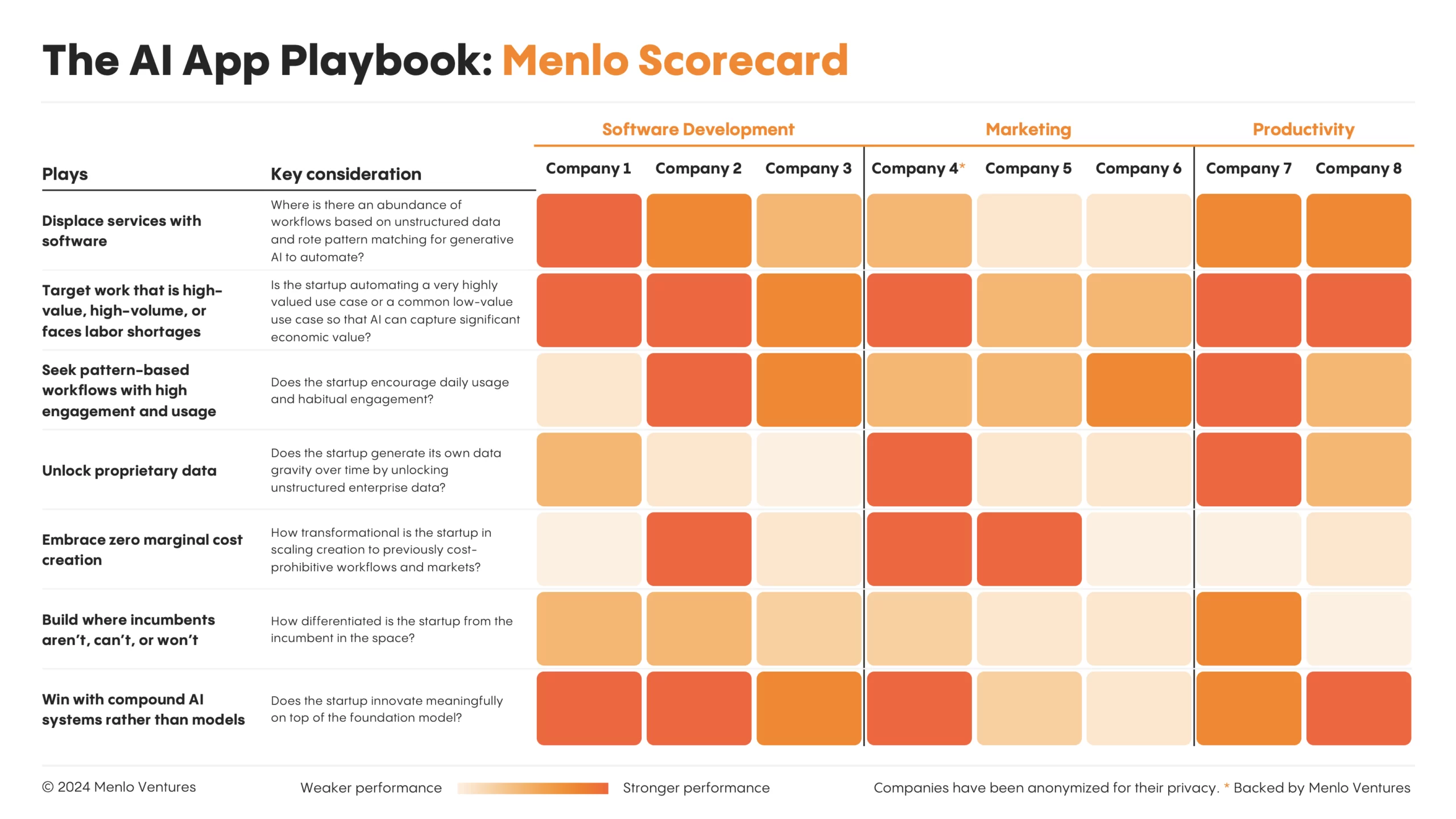

The chart below shows how we scored nine of the leading apps (anonymized) against Menlo’s AI app playbook. In the following section, we’ll provide more detail on each play and share real-world stories of how top performers have used these strategies to win.

Case Studies from the First Generation of AI-Native Enterprise Apps

Case study #1: Co:Helm displaces services with software

The biggest apps are created in the largest markets, so it seems logical that startups should focus on departments or verticals with substantial software budgets. But this view underestimates the far larger opportunity for generative AI in automating professional services, which, again, make up nearly 80% of today’s economy.

Co:Helm is a startup that targeted the opportunity in services. For years, getting pre-approval from health insurance companies for patient care (known as prior authorization) was considered too niche to generate venture outcomes because software was unable to replicate or replace the human judgment required to apply payer rules to each medical case. By building with LLMs, Co:Helm developed an AI “clinical brain” that serves as a copilot for nurses performing this manual work, allowing the company to expand beyond the $1 billion spent on software in this market and target the $15 billion allocated for professional services.

Case study #2: Abridge targets high-value work, Observe.AI targets high-volume work

A clear indicator of economic value lies in how much companies will pay to have the job done manually, whether by a few well-compensated professionals or by many lower-paid workers. At the high-value end of the market, Abridge enables highly paid doctors to offload low-level administrative work like note-taking so they can spend more time treating patients. At the high-volume end, Observe.AI* makes the contact center more productive by automating much of the routine work that customer service reps perform, freeing them to focus on higher-value issues.

Case study #3: Eve creates moats through daily usage

There are three ways enterprise applications can create lasting value: (1) store data for a critical business function (becoming the system of record), (2) serve as the platform where daily work happens, or (3) enable collaboration and drive network effects. Whether as copilots or more autonomous AI agents, the most natural entry point for many AI apps will be option 2. Their first moat will come from habitual engagement and optimizing those predictable workflows rather than data or network effects, where incumbents have more of an advantage.

Consider Eve*, an app that automates case intake and legal research—the labor-intensive but important work of gathering information and identifying key patterns in legal cases. Although the AI solution has no initial data or network advantage over the legal databases on which it sits, the startup’s initial enterprise gravity comes from the daily engagement occurring on its platform. By redirecting legal workflows through its own platform, Eve eliminates the need for lawyers to search through dense stacks of legal precedents and becomes a critical tool for lawyers to interact with day to day.

Case study #4: Eleos unlocks proprietary data

AI-native apps can create a data moat by utilizing unstructured, proprietary data that incumbents don’t leverage or can’t access. For example, Eleos* is an ambient scribe that documents patient-therapist conversations during visits and makes the unstructured clinical notes available for session intelligence and coaching later. In doing so, Eleos unlocked previously inaccessible data and built a proprietary data moat (and, in fact, the world’s largest dataset of behavioral health conversation data). In contrast, therapists before Eleos often incompletely documented patient encounters, and the data that did make it into electronic health records (EHRs) remained siloed and unactionable for further analysis, such as diagnosis, due to its unstructured nature.

Case study #5: Typeface embraces zero marginal cost creation

Enterprise content creation is notoriously resource-intensive. Putting together a blog post might take a team of account executives, marketers, and designers a week; writing an outbound sales email could take an hour. LLMs bring the marginal cost of this work close to zero, increasing content production across the organization. Typeface*, a platform for personalized content generation, uses generative AI to create branded assets across the enterprise. For example, it’s now fast and easy for HR to create content for everything from job descriptions to benefit questionnaires to employee newsletters without leaning heavily on other departments.

Case study #6: Sana builds where incumbents aren’t, SmarterDx builds where incumbents can’t, HeyGen builds where incumbents won’t

Instead of going head-to-head in sectors where an incumbent holds the advantage, many of the first AI-native applications won by identifying sectors where:

The incumbent is weak or nonexistent. Sana* pioneered semantic search as a new category unlocked by advancements in AI. Because this was impossible before the advent of vector retrieval, Sana built in a new market where no incumbent existed.

The incumbent is incapable of moving fast. SmarterDx competes with legacy clinical documentation integrity solutions like that of 3M, a company founded in 1902 whose frustrating product gaps in identifying “upcoding” opportunities left an opening for new AI solutions.

The incumbent underestimates the size of a new market. HeyGen found an initial wedge in AI avatars for sales outreach, product marketing, and learning and development that Adobe’s video editing tools don’t serve today. Within the context of Adobe’s $19 billion business, HeyGen’s workflow is negligible—yet the numbers speak for themselves: Revenue for the AI avatar category is rapidly approaching $100 million.

Case study #7: EvenUp wins with compound AI systems rather than models

Instead of trying to build better foundation models, most of today’s winners succeed through compound AI systems—systems that use multiple interacting components, including multiple calls to models, retrievers, or external tools. Architectural approaches include RAG, chain-of-thought, tool use, and agents. EvenUp is an example from the legal sector. The company’s architecture distinguishes itself via domain-specific prompting, indexing, reranking, and search and retrieval—which has proven durable against competitors that have taken a more model-based approach of custom legal LLMs for each enterprise customer.
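To make the idea of a compound AI system concrete, here is a minimal sketch of a retrieve, rerank, and prompt pipeline wrapped around a single model call. It is not EvenUp’s architecture: the `Document` type, the keyword-overlap retriever, the density-based reranker, and the `model` callable are all hypothetical stand-ins for the indexing, reranking, and LLM components a production system would use.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> Set[str]:
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {w.strip(".,;:!?()").lower() for w in text.split()} - {""}


def retrieve(query: str, corpus: List[Document], k: int = 3) -> List[Document]:
    """Stage 1: keep up to k documents sharing the most terms with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def rerank(query: str, docs: List[Document]) -> List[Document]:
    """Stage 2: reorder candidates by the share of their terms matching the query.

    A toy stand-in for a learned reranker.
    """
    q = tokenize(query)

    def density(d: Document) -> float:
        terms = tokenize(d.text)
        return len(q & terms) / len(terms) if terms else 0.0

    return sorted(docs, key=density, reverse=True)


def build_prompt(query: str, docs: List[Document]) -> str:
    """Stage 3: assemble a grounded prompt from the ranked sources."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return f"Use only the sources below.\n{context}\n\nQuestion: {query}"


def answer(query: str, corpus: List[Document],
           model: Callable[[str], str], k: int = 3) -> str:
    """Run the full pipeline; `model` is any callable wrapping an LLM call."""
    ranked = rerank(query, retrieve(query, corpus, k))
    return model(build_prompt(query, ranked))


if __name__ == "__main__":
    corpus = [
        Document("a", "Settlement demand letters summarize medical bills and damages."),
        Document("b", "Quarterly marketing newsletter for employees."),
        Document("c", "Medical bills."),
    ]
    # A stub model that echoes its prompt, so the pipeline output is visible.
    print(answer("medical bills damages", corpus, model=lambda prompt: prompt))
```

In a design like this, most of the differentiation sits in the stages around the model: each one can be tuned on domain data or replaced independently, while the model itself stays interchangeable.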

As the chart below illustrates, most first-wave winners succeeded through compound AI systems that enabled them to leverage proprietary data as a key advantage.

How to Apply This Playbook

The first wave of generative AI apps is reshaping the enterprise with new solutions that create tremendous economic value. But this is just the beginning. The seven golden rules that enabled pioneering AI-native applications to break out can serve as a roadmap for the next wave of AI apps and point them to the largest opportunity areas.

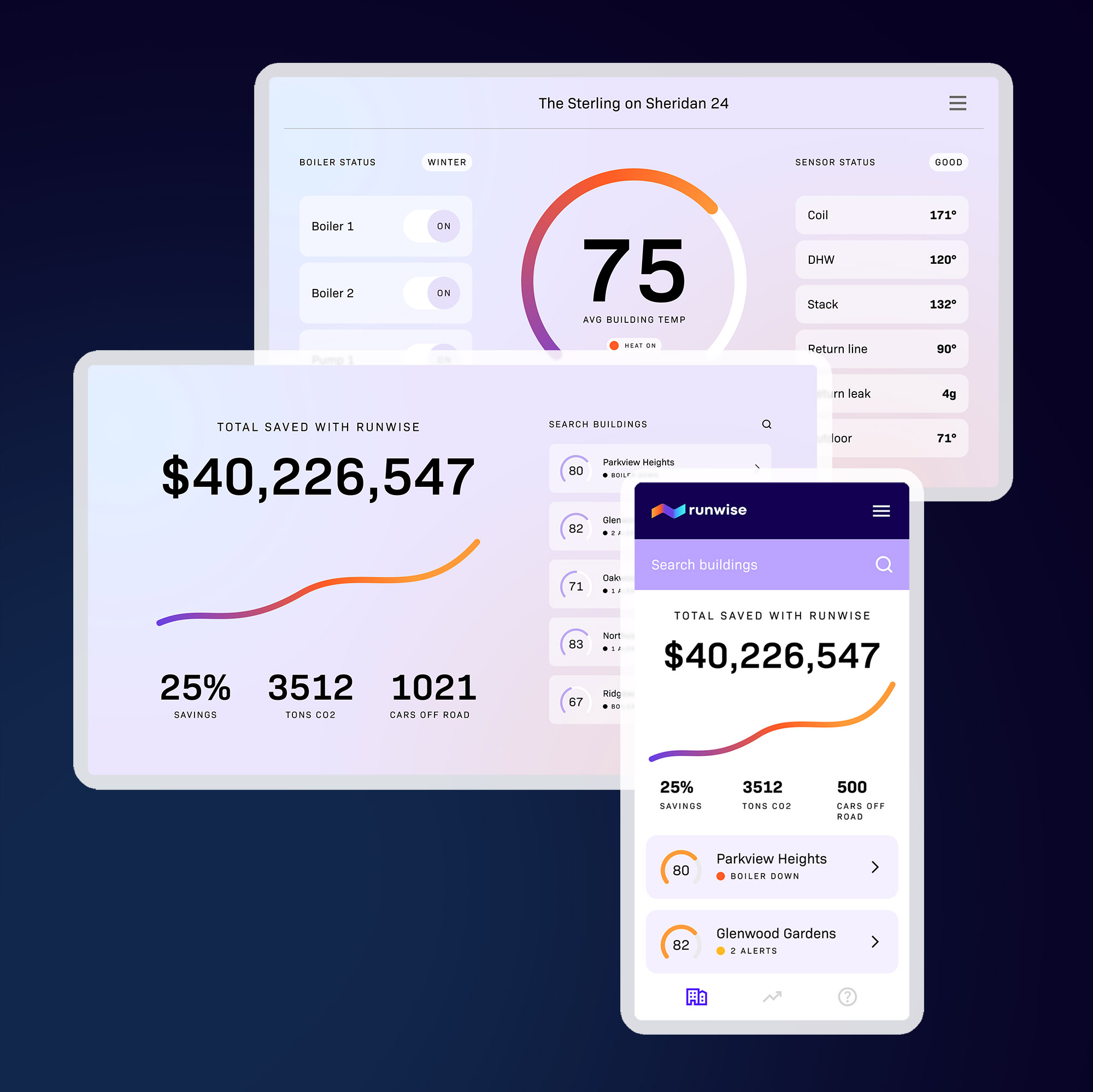

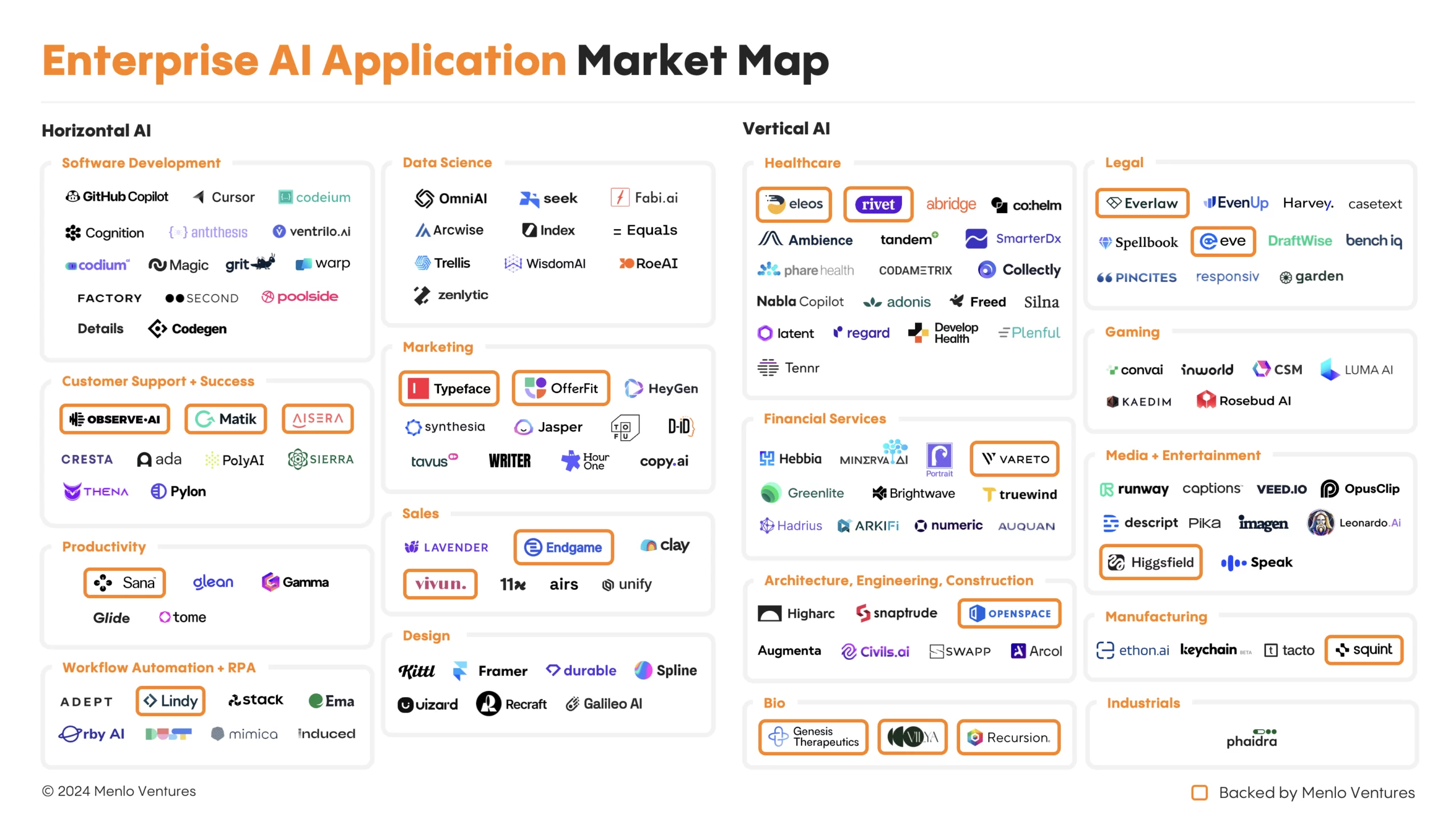

At Menlo, we couldn’t be more excited about the transformation AI will drive across the horizontal and vertical application markets. We’re off to a fast start with investments that span legal, healthcare, bio, customer support, productivity, and marketing.

Our enterprise AI portfolio already includes world-class applications, including Aisera, Eleos Health, Everlaw, Eve, Genesis Therapeutics, Lindy, Matik, Observe.AI, OfferFit, Recursion, Rivet, Sana Labs, Squint, Typeface, and Vilya, as well as investments in Anthropic, Pinecone, Unstructured, Neon, Cleanlab, Eppo, and Clarifai in the modern AI stack.

But these are early innings and new opportunities will emerge. Our team is committed to finding and working with the best entrepreneurs who are building across generative AI, from the modern AI stack to consumer AI, security for AI, and AI-first SaaS applications. As the market map below shows, the universe here is broad.

If you’re a founder who—like us—believes that AI will disrupt enterprise SaaS, we’d love to hear from you:

Matt Murphy

Steve Sloane

Derek Xiao

*Backed by Menlo Ventures

1. “Triple, triple, double, double, double” describes a best-in-class growth trajectory: two years of tripling a company’s annualized revenue, followed by three years of doubling it, for a cumulative 72x increase (3 × 3 × 2 × 2 × 2) over five years.

Matt is a partner at Menlo Ventures and invests multi-stage across AI infrastructure (DevOps, data stack, middleware, API platforms), AI-first SaaS (vertical and horizontal), and robotics. Since joining Menlo in 2015, Matt has led investments in Anthropic, Airbase, Alloy.AI, Benchling, 6 River Systems (acquired by Shopify), Canvas, Clarifai, Cleanlab, Carta,…

Steve is a partner at Menlo focused on investments in Menlo’s Inflection Fund, which targets fast-growing Series B/C companies. He specializes in AI-powered vertical SaaS investments and supply chain technology, including Enable, Eleos, Observe.AI, Scout, 6 River Systems, ShipBob, CloudTrucks, and Parade. Steve joined the firm in 2015 as an…

As an investor at Menlo Ventures, Derek concentrates on identifying investment opportunities across the firm’s thesis areas, covering AI, cloud infrastructure, digital health, and enterprise SaaS. Derek is especially focused on investing in soon-to-be breakout companies at the inflection stage. Derek joined Menlo from Bain & Company where he worked…