The enterprise AI arms race is on. Powerful foundation models like Claude 2 and GPT-4 have made AI deployment mandatory. Enterprise data is the not-so-secret ingredient that allows engineering teams to build AI and analytics products that help them stand out from the competition. But creating trustworthy models (i.e., models that produce reliable, high-accuracy predictions and don’t hallucinate wrong outputs) and deploying them painlessly is easier said than done. Quality data is the key to unlocking the value of enterprise AI, and bad data comes at a massive cost: “Garbage in, garbage out” rings true. Without intelligent data quality tooling, the annotation and cleaning process is manual, slow, and costly, taking 80% of data scientists’ time.

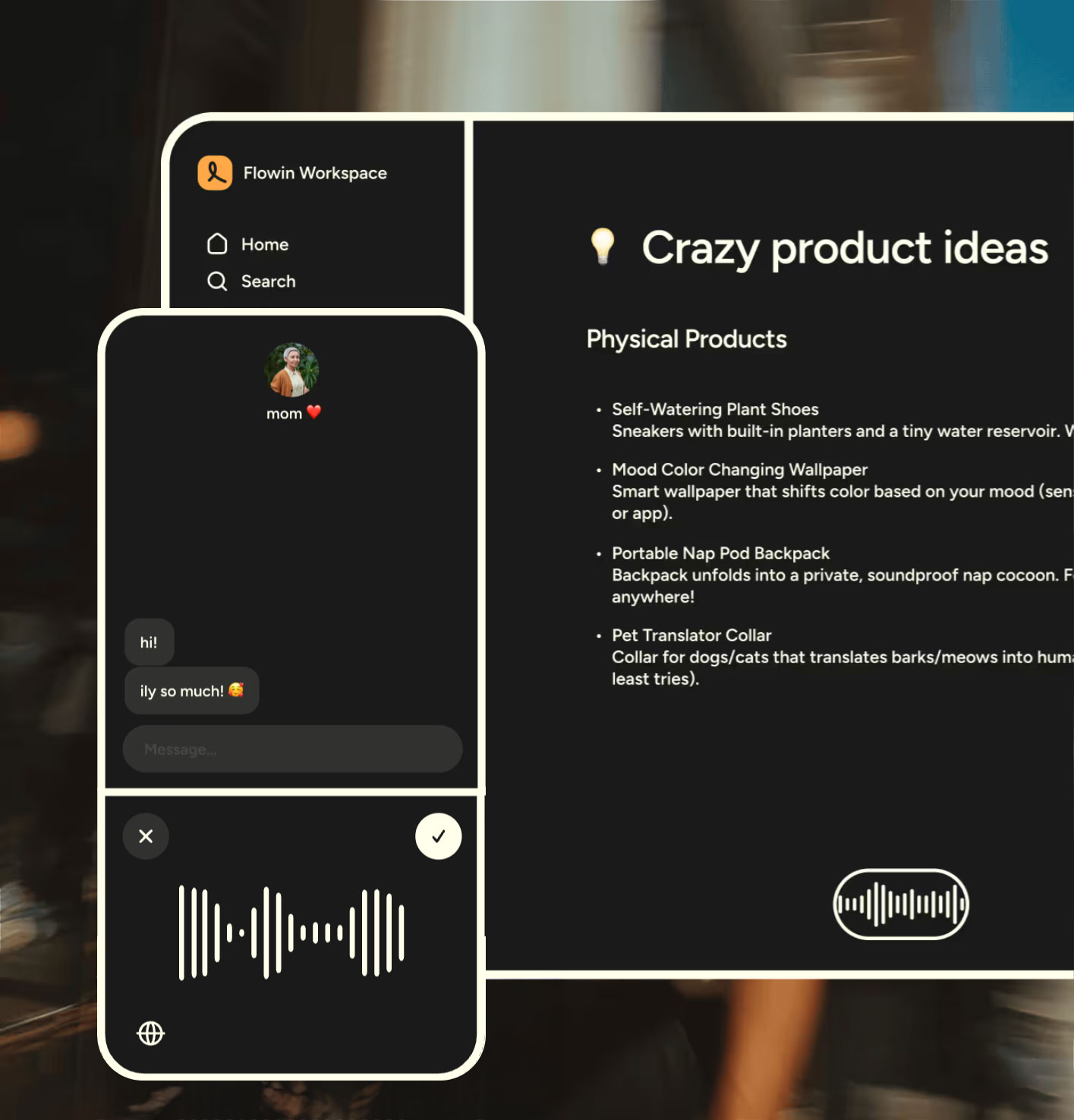

That’s why we are thrilled to partner with the incredible team at Cleanlab, as they make it dead simple for engineering teams to deploy highly accurate AI and analytics systems on their enterprise data. With Cleanlab, AI teams automate weeks of manual data labeling and bypass dozens of engineering hours for model training and deployment. And because Cleanlab models train on datasets denoised by AI, they are remarkably accurate. Cleanlab’s automated data quality solution turns messy, real-world data into state-of-the-art models. Moreover, Cleanlab solutions don’t require enterprise teams to change their current ML and data pipelines: they integrate directly with existing solutions via API calls, making it easy to get started automating the painful part of data and ML ops for enterprises.

The First Data-centric AutoML Platform

While Cleanlab CEO Curtis Northcutt was pursuing his CS PhD at MIT, he made a startling discovery: 6% of the ImageNet validation dataset, widely considered to be the gold standard of accuracy for computer vision, was mislabeled. He saw firsthand the cascading effect of these label errors and set out to build an automated way to fix them. The result? Cleanlab: an open-core data curation platform that leverages a model’s own intelligence to improve its data. Confident Learning, the subfield of theory and algorithms that Curtis invented at MIT, powers Cleanlab today. Cleanlab algorithms increase model accuracy by 10-30%, a figure that increases as data gets sparser and noisier, and the algorithms learn and improve as work is done in the system. Cleanlab Studio incorporates generative AI models and improves their reliability, creating the most accurate enterprise models on the market that take just a few clicks to train and deploy.
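To give a feel for how a model’s own predictions can surface label errors, here is a minimal sketch of the thresholding idea behind Confident Learning. It is a simplified illustration, not Cleanlab’s implementation: the function name `find_label_issues_sketch`, the toy data, and the rule itself (compare each example’s predicted probabilities against per-class average confidence) are our assumptions for this example.

```python
def find_label_issues_sketch(labels, pred_probs):
    """Return indices of examples whose given label looks wrong.

    Hypothetical helper for illustration only. Assumes every class has at
    least one labeled example and that pred_probs[i][k] is the model's
    predicted probability that example i belongs to class k.
    """
    n_classes = len(pred_probs[0])

    # Per-class threshold: the model's average confidence in class k,
    # measured over the examples that are labeled k.
    thresholds = []
    for k in range(n_classes):
        own = [p[k] for y, p in zip(labels, pred_probs) if y == k]
        thresholds.append(sum(own) / len(own))

    issues = []
    for i, (y, p) in enumerate(zip(labels, pred_probs)):
        # Classes the model is confident about for this example.
        confident = [k for k in range(n_classes) if p[k] >= thresholds[k]]
        if not confident:
            continue  # the model is unsure, so we do not flag anything
        likely = max(confident, key=lambda k: p[k])
        if likely != y:
            issues.append(i)  # given label disagrees with a confident prediction
    return issues


# Toy data: example 2 is labeled 0, but the model strongly predicts class 1.
labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.9, 0.1],
    [0.8, 0.2],
    [0.1, 0.9],
    [0.2, 0.8],
    [0.1, 0.9],
    [0.3, 0.7],
]
issues = find_label_issues_sketch(labels, pred_probs)
print(issues)
```

Using per-class thresholds rather than a single global cutoff means a class the model is systematically less sure about is not unfairly flagged, which is the intuition that makes this approach tolerant of noisy, imbalanced data.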

Cleanlab recently added a new product to the Cleanlab Studio platform that solves one of the biggest problems facing enterprise LLM teams and solutions today: hallucinations. LLMs have proven themselves useful in several key markets, but how do you know when you can trust their outputs? Cleanlab’s Trustworthy Language Model (TLM) adds a trustworthiness score to all LLM outputs. TLM extends Cleanlab Studio’s capabilities by adding intelligent metadata that helps automate reliability and quality assurance for systems that rely on LLM outputs, synthetic data, and generated content. Cleanlab’s Trustworthy Language Model is available to try in beta today as part of Cleanlab Studio at cleanlab.ai.
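As a rough sketch of how a per-output trustworthiness score could automate quality assurance, the snippet below routes answers by score. Everything here is hypothetical: the `ScoredAnswer` type, the `route_by_trust` helper, the assumption that scores fall between 0 and 1, and the 0.8 cutoff are ours for illustration and are not taken from the TLM API.

```python
from dataclasses import dataclass


@dataclass
class ScoredAnswer:
    """Hypothetical container for an LLM output plus its trust score."""
    text: str
    trust_score: float  # assumed to lie in [0, 1]; higher means more trustworthy


def route_by_trust(answers, threshold=0.8):
    """Split answers into auto-accepted and human-review buckets.

    The threshold is an illustrative assumption; a real deployment would
    tune it against its own tolerance for errors.
    """
    accepted = [a for a in answers if a.trust_score >= threshold]
    needs_review = [a for a in answers if a.trust_score < threshold]
    return accepted, needs_review


# Made-up example data, not real model output.
answers = [
    ScoredAnswer("Paris is the capital of France.", 0.97),
    ScoredAnswer("The contract renews on an unspecified date.", 0.41),
]
accepted, needs_review = route_by_trust(answers)
print(len(accepted), len(needs_review))
```

The design choice is simply to let high-scoring outputs flow through automatically while sending the rest to a person, so review effort concentrates where the model is least reliable.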

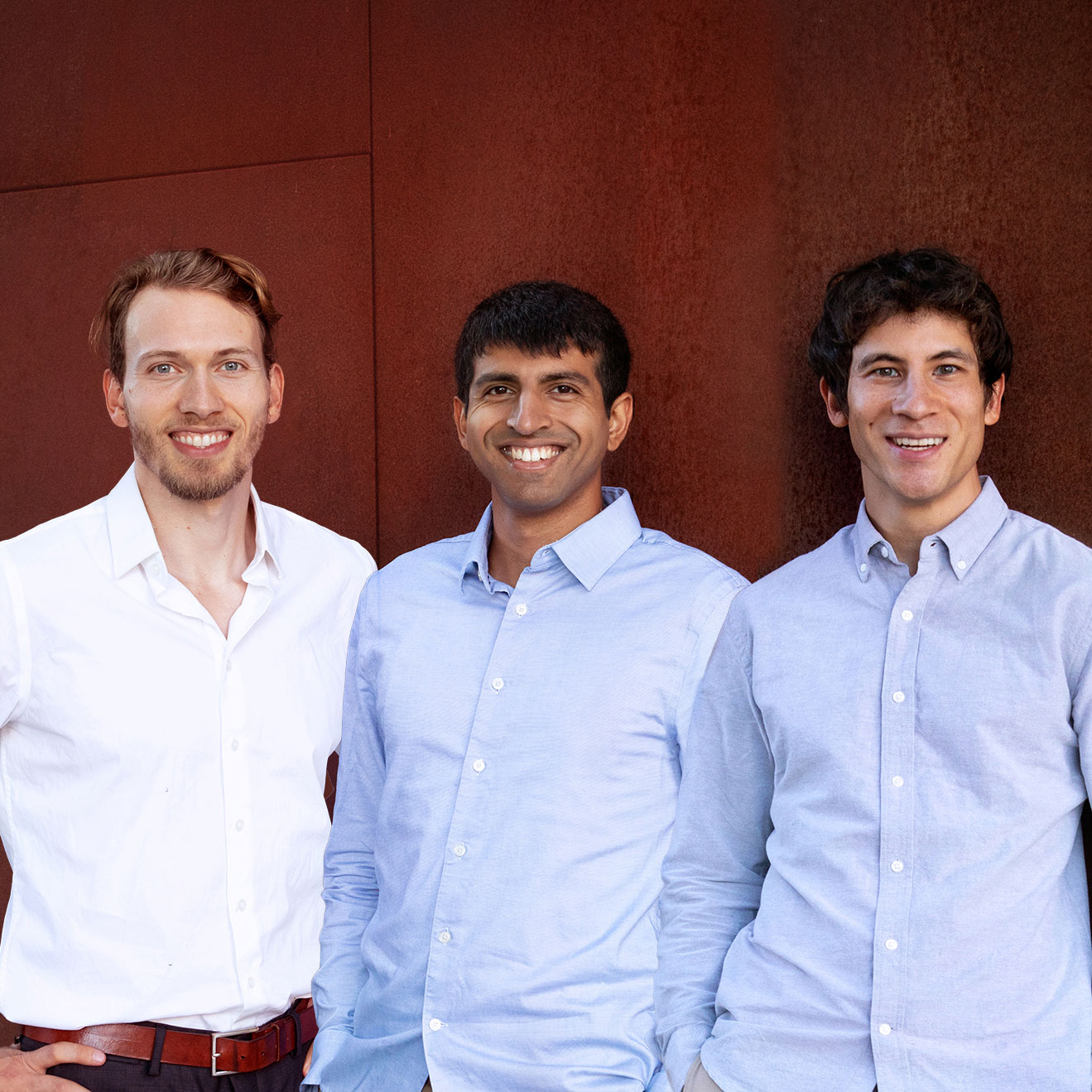

Expert Researchers and Practitioners

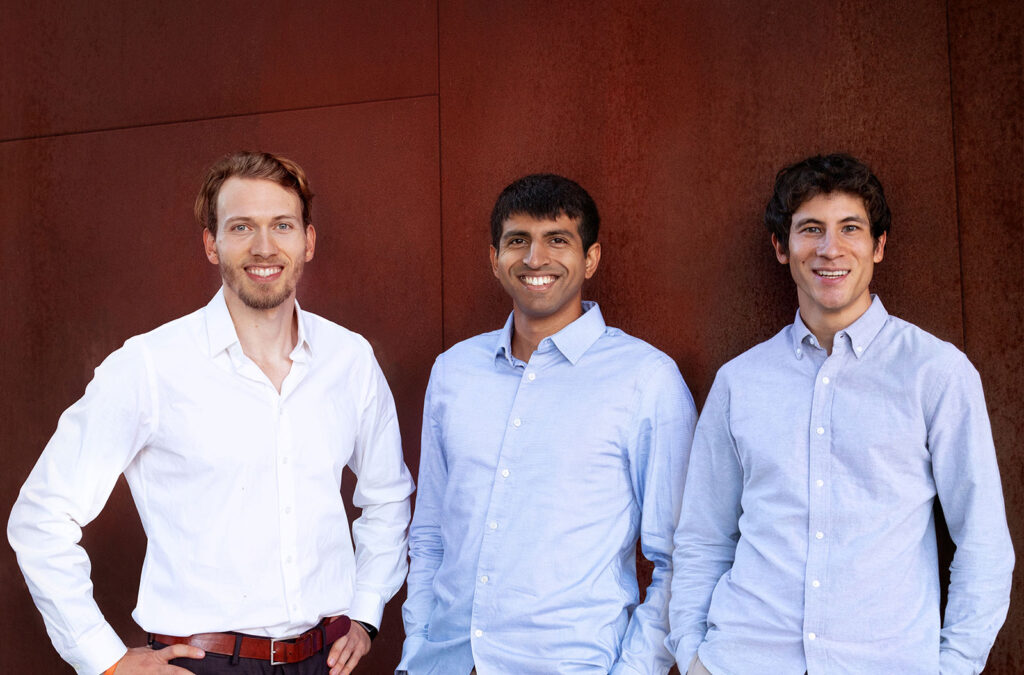

When we bet on Cleanlab, we bet on a team of rockstars. It’s rare to find a founding team that perfectly balances research depth and practical expertise the way Curtis, Anish, and Jonas do. Balance is probably the wrong word: CEO Curtis Northcutt, CTO Anish Athalye, and Chief Scientist Jonas Mueller are off the charts in both dimensions. At MIT, the team published research on hardware security, adversarial ML, and, of course, data-centric AI. The team also cut their teeth in the “real world” of hardcore AI engineering. Curtis was the CTO of a company that developed AI models for analyzing customer calls; Anish built ML systems at OpenAI and Google; and Jonas was an applied scientist on AWS SageMaker, where he created the popular open-source AutoML toolkit AutoGluon.

Building the Modern AI Stack

We could not be more excited to join forces with Curtis, Anish, and Jonas as they bring data-centric AI to the enterprise. They are already off to an incredible start. Today, over 10% of Fortune 500 companies (including AWS, JPMorgan Chase, Google, Oracle, and Walmart) use Cleanlab. Top AI teams at Hugging Face, Databricks, and ByteDance are also happy users.

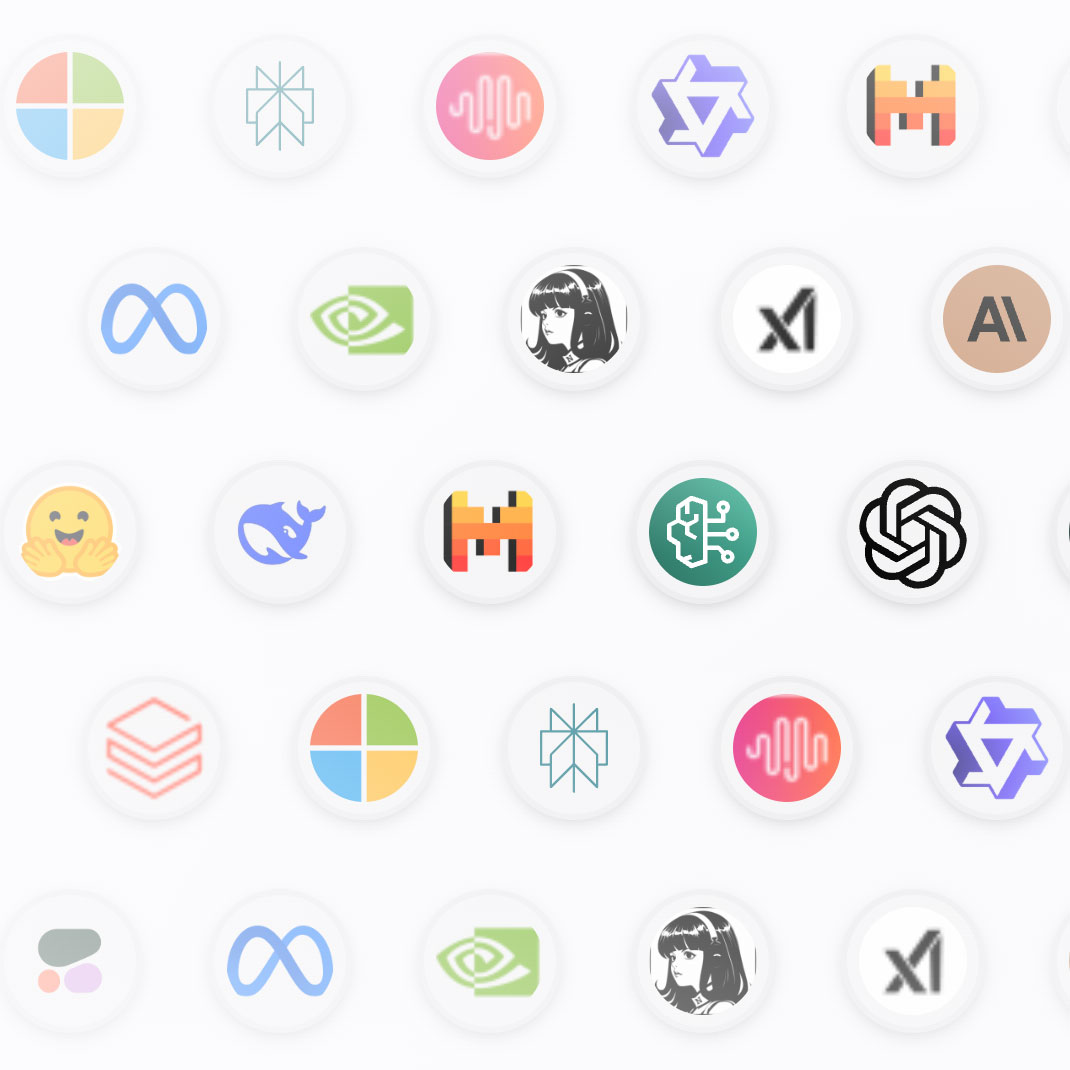

Cleanlab is a perfect fit for Menlo’s modern AI stack thesis and growing AI portfolio. At the foundation model layer, we invested in Anthropic, the developer of Claude 2, a large language model (LLM) designed to be safer than competing models and already championed by some as a potential “ChatGPT killer.” In AI infrastructure, we’ve backed Pinecone and Neon at the database layer, TruEra at the AI quality layer, Clarifai at the build/deploy layer, and now Cleanlab at the data quality layer. Check out our enterprise AI infrastructure market map. While picks and shovels are the key enablers that make all this possible, we are also investing at the application layer in startups with a disruptive take on how to use generative AI, such as Typeface, Sana Labs, Lindy, and more to be announced shortly.

As a team, we are going ALL IN on the infrastructure that helps companies stay on the cutting edge of AI, and the transformative teams working hard to accelerate this AI-native future. If you are building in the modern AI stack or have a novel application idea, we would love to hear from you!

Matt is a partner at Menlo Ventures and invests multi-stage across AI infrastructure (DevOps, data stack, middleware, API platforms), AI-first SaaS (vertical and horizontal), and robotics. Since joining Menlo in 2015, Matt has led investments in Anthropic, Airbase, Alloy.AI, Benchling, 6 River Systems (acquired by Shopify), Canvas, Clarifai, Cleanlab, Carta,…