AI-generated video is on the verge of its ChatGPT moment.

In the past year, video generation models have produced stunning demonstrations in short clips that have flooded social media—from fantastical trailers of Star Wars in the style of Wes Anderson to adorable snapshots of puppies playing in the snow.

But if we draw the analogy to large language models, even the most advanced video models today are still in their GPT-2 era. In 2019, GPT-2 could generate brief, coherent paragraphs, but struggled with nuanced ideas and lacked the emergent reasoning capabilities that the next generation of LLMs would unlock.

Similarly, today’s video models can produce videos less than five seconds long with reasonable realism and movement, but fall short when attempting to generate longer, production-grade content, lacking the temporal stability and object permanence crucial for enterprise applications.

That’s where Higgsfield comes in. Today, we’re thrilled to announce Menlo’s partnership with founder Alex Mashrabov and his team, who are pioneering a diffusion transformer architecture that promises to push the boundaries of visual realism, natural movement, and physics simulation. By combining latent diffusion models with transformer architectures and training on vast real-world data, Higgsfield is building one of the first “world models”: models so realistic that they can simulate the physical world. The result is longer, smoother, and more coherent sequences that rival professionally produced content, ushering video AI into its next era.

Scaling Video With Transformers

Video AI’s inflection point has been a decade in the making, tracing back to early image generators like generative adversarial networks (GANs). Diffusion models marked the first milestone toward the current state of the art, enabling image generation in pixel space. Latent diffusion models, the family that includes Stable Diffusion, further enhanced scalability by taking a similar approach in a compressed latent space. Many current video AI models now incorporate time into these latent representations to produce short sequences of motion.

Higgsfield is pushing boundaries further by leveraging transformer architectures, which enable scale for world simulation. The company repurposes the “world model,” a concept originally used for self-driving cars, and combines it with diffusion models to render visuals in cinematic quality. It further enhances steerability and frame-by-frame visual coherence with reinforcement learning to produce smooth, realistic videos that adhere closely to prompts.

Unlocking Studio-Grade Quality

Alex leads a team of world-class AI engineers and product designers, some of whom worked with him in his previous role as Head of Generative AI at Snap. Together, they’ve already scaled products to billions of users, including My AI, the world’s second-largest consumer AI chatbot, used by over 150 million people. In the video generation space, we’ve found Alex to be an N-of-1 founder. He has a strong grasp of both the research roadmap at the front lines of AI video generation and the go-to-market strategy to capture the economic value created.

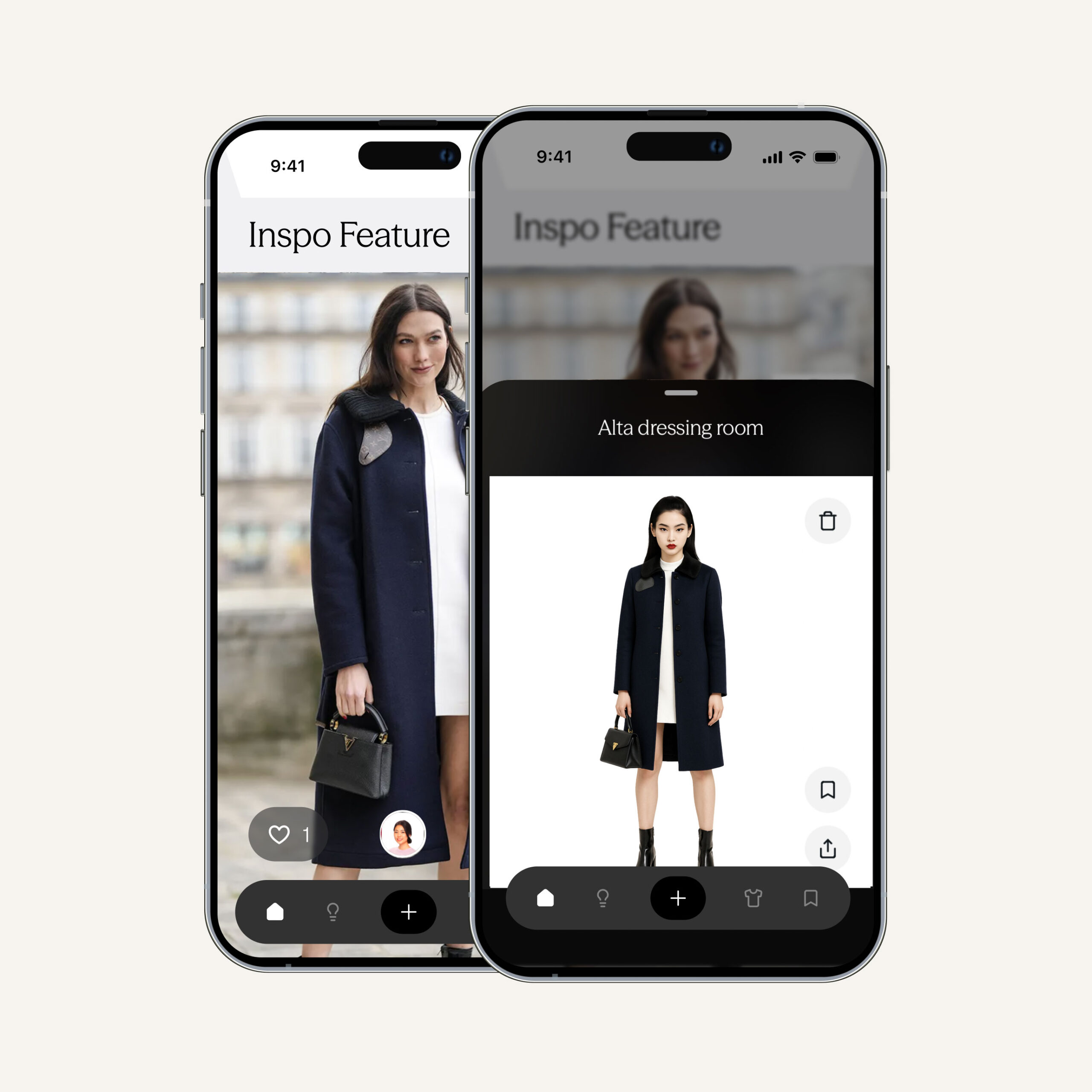

The company’s first product, Diffuse, is an entertainment app launching over the coming weeks that enables users to create and share short videos of themselves and their friends. It offers mobile consumers an unparalleled level of personalization, creative control, and creative fine-tuning. Looking ahead, the company will soon release its first text-to-video model and eventually a studio-grade video marketing platform for both creators and enterprises.

At Menlo, we are thrilled to partner with Higgsfield and support their mission to build the next generation of AI video models. The company joins a deep AI portfolio, which includes other leaders building at the infrastructure and application layers like Anthropic, Pinecone, Unstructured, Neon, and Typeface.

We can’t wait for the world to meet Higgsfield. Join the waitlist at https://higgsfield.ai/.

Amy came to Menlo Ventures to grow the firm’s consumer technology and gaming practice and back founders building new products at the forefront of emerging platform shifts. As an investor, Amy seeks founders who share her obsession with products that define how people work, live, and play. She believes that emerging…

As an investor at Menlo Ventures, Derek concentrates on identifying investment opportunities across the firm’s thesis areas, covering AI, cloud infrastructure, digital health, and enterprise SaaS. Derek is especially focused on investing in soon-to-be breakout companies at the inflection stage. Derek joined Menlo from Bain & Company where he worked…