In our July analysis of Security for Agents, we explored the novel vulnerabilities introduced by autonomous AI systems. As agents increasingly make independent decisions, orchestrate multi-step workflows, and interact with external systems, they require fundamentally different security paradigms and an emerging stack of specialized tooling. But there’s a crucial flip side to this equation: Agents for Security. How do AI agents reshape the categories that security incumbents have dominated for decades?

With 4.8 million unfilled cybersecurity positions globally and threat volumes growing orders of magnitude faster than human response capabilities, the industry faces an existential scale challenge that only autonomous AI can solve. The math is sobering: Human analysts triage ~50 alerts per day, while AI has accelerated the pace of attack generation by close to 100x. As a result, the time from compromise to exfiltration has dropped to under five hours in 25% of cases, nearly 3x faster than in 2015.

What we’ve been calling “AI agents” are increasingly functioning as AI employees: autonomous systems with defined roles, reporting structures, and performance metrics, not the copilot assistants that incumbents have layered onto existing dashboards. AI employees represent a workforce transformation reshaping every major security category from EDR to SIEM to network security.

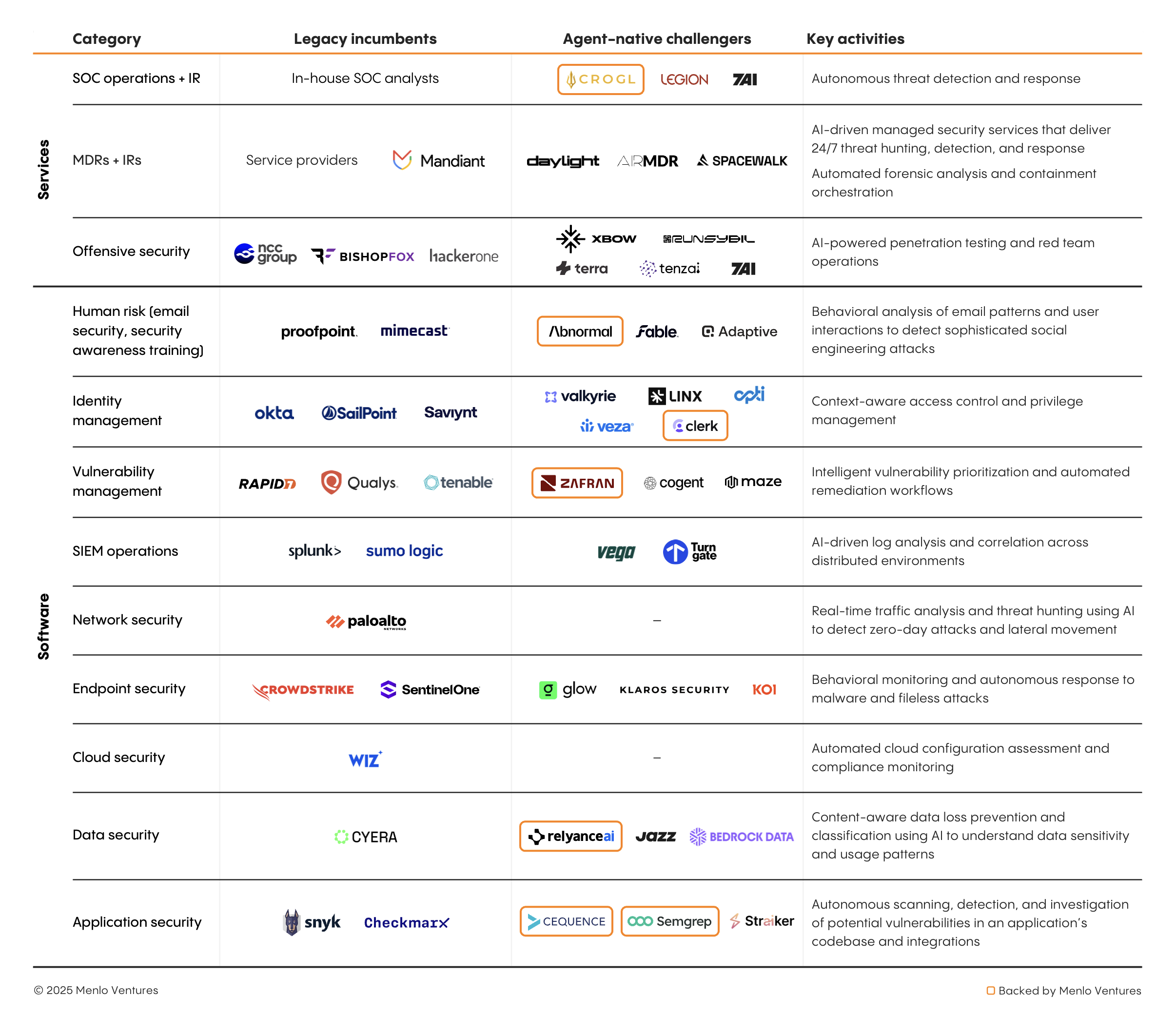

Ushering in the New Guard of Cybersecurity

In the last year, a new wave of ambitious AI-native security startups has emerged to challenge incumbents across every major category. The pattern is clear: The shift from human-scale to autonomous operations is creating openings even in seemingly entrenched segments.

The opportunity for these challengers stems from two converging forces: incumbents struggling to defend against increasingly sophisticated AI-powered threats, and AI agents now capable of operating security functions almost autonomously at enterprise scale.

Cracks in the Foundation

Every Fortune 500 organization that has suffered a major cybersecurity incident in the last five years already had best-in-class security suites installed, often after years of custom implementation work. Yet the evidence is mounting that even sophisticated security deployments are not sufficient. One in five S&P 500 companies suffered major disruptions due to vendor failures in 2024. Organizations with mature security programs and significant vendor investments still experienced catastrophic failures because their human-centric security models were unable to adapt to the scale and sophistication of modern threats.

While these breaches persist, the macro conditions worsen. The global cybersecurity talent gap hit 4.76 million professionals in 2024, while alert volumes continue to climb. Attack surfaces are exploding as organizations struggle to even inventory what they're protecting, and threats are becoming increasingly dynamic, leaving static solution providers scrambling to keep pace.

Moreover, the shift to AI-native enterprises will only amplify these vulnerabilities. As organizations deploy thousands of AI employees across their operations, traditional security models will face an exponential increase in non-human identities, autonomous decision-making processes, and machine-to-machine interactions that existing tools simply cannot monitor or govern effectively. AI employees will generate data access patterns that are both unpredictable and high-velocity, requiring security systems that can reason through context and intent in real time rather than relying on predefined rules or historical baselines. Incumbent security vendors, constrained by their legacy architectures and business models, will find themselves trying to protect AI-driven enterprises with fundamentally human-scaled tools. The result will be an even wider gap between the sophistication of AI-powered threats and the capabilities of traditional security defenses, creating unprecedented blind spots that only AI-native security solutions can address.

This was made jarringly clear when Anthropic* recently disrupted what it assessed as the first large-scale cyberattack executed without substantial human intervention. In this instance, a state-sponsored group manipulated Claude Code to autonomously infiltrate roughly 30 global targets, with AI performing 80-90% of the campaign work and making thousands of requests, often several per second, at a pace human defenders simply cannot match. Traditional security tools built for human-speed threats are fundamentally mismatched against AI-orchestrated campaigns that compress weeks of reconnaissance, exploit development, and data exfiltration into hours.

The pressure is on. Black Hat and DEF CON 2025 centered on a singular theme: AI is fundamentally changing the threat landscape. Yet incumbents face structural inhibitors that make rapid adaptation difficult. For example:

- Product architecture. Legacy SIEM platforms like Splunk were designed for batch processing and human-driven investigations, not real-time autonomous decision-making. Their rule-based detection engines require predefined signatures and manual tuning, an approach fundamentally incompatible with AI employees that need to reason through novel attack patterns and orchestrate dynamic responses across multiple security tools without human intervention.

- Business model conflicts. Incumbent vendors like CrowdStrike and Palo Alto Networks derive the bulk of their revenue from per-seat, per-device licensing models that assume human operators. Shifting to outcome-based pricing (pay-per-threat-stopped or pay-per-incident-resolved) would cannibalize existing revenue streams while requiring wholesale changes to sales methodologies, channel partner economics, and customer success models—a transformation most public companies are reluctant to undertake.

- Implementation inertia. Enterprise security deployments often involve 12- to 24-month implementations through system integrators, with heavy customization for compliance frameworks like SOX, PCI-DSS, and industry-specific regulations. These bespoke configurations create technical debt and vendor lock-in that make migration costly, while their static rule sets and manual approval workflows are antithetical to the dynamic, autonomous operation that AI employees require.

Interestingly, the supposed “data moat” advantage of incumbents becomes questionable when AI employees need real-time context and decision-making capabilities that transcend historical pattern recognition. Having years of security logs does not necessarily translate into autonomous threat response.

Where AI Agents Win

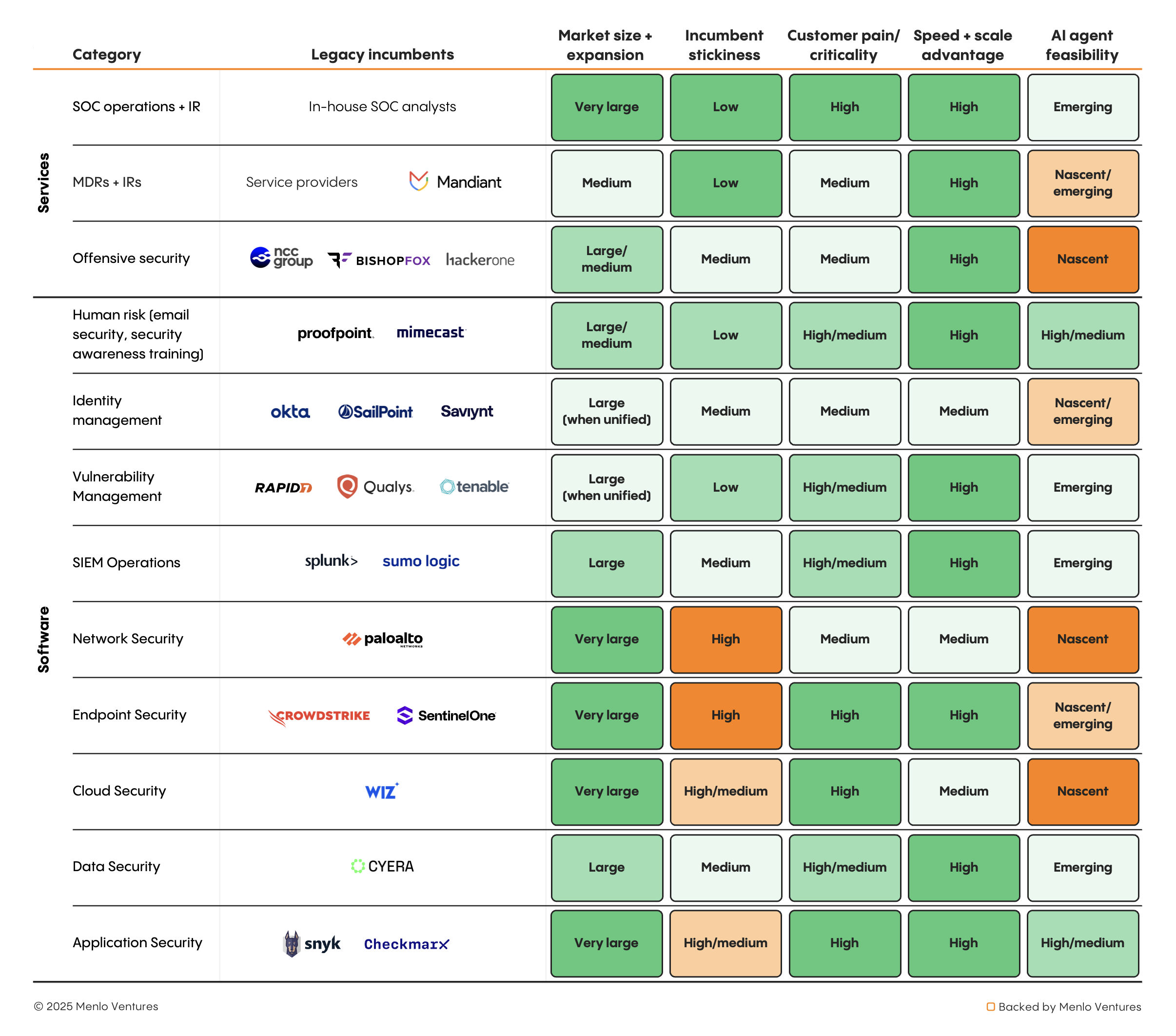

To understand where AI agents will be the most transformative, we examine each major security category to assess whether and how it can benefit from agents. Not every security function is equally suited for autonomous AI: Some require nuanced human judgment, while others involve life-or-death decisions where errors are catastrophic. Market dynamics also vary dramatically; while some categories offer clear ROI advantages for AI agents, others face entrenched incumbents with strong switching costs. To understand which categories are susceptible to disruption, we assess both the market's readiness for agents and the ability of agents to materially improve the key activities of the incumbent.

Market Opportunity

- Market size + incumbent vulnerability: How much is spent on human labor in this category? How structurally disadvantaged are incumbents in adopting AI agents?

- Customer pain + ROI clarity: Are customers desperately frustrated with current solutions? Can AI agents demonstrate immediate, measurable ROI?

AI Agent Feasibility

- Speed + scale advantage: Does this category fundamentally benefit from doing things faster or at greater scale than humans can achieve?

- Job function clarity + decision independence: Can this security function be clearly defined, and can an AI agent make consequential decisions without real-time human approval?

- Error tolerance + autonomy: What happens when the AI agent makes a mistake, and can it work independently for extended periods?

What emerges is a spectrum, from categories where AI agents are already demonstrating clear value to those where technical or organizational barriers remain substantial. Notably, service-oriented categories like SOC operations and offensive security show particularly strong fundamentals, as they replace expensive human labor with minimal switching costs. These dynamics are already playing out in several categories where AI-native startups are gaining significant traction.

Diving Deep: Categories Under Pressure

The above framework identifies where disruption is possible, revealing categories where AI agents deliver both technical superiority and clear economic value. Several categories show these forces already at play.

SOC Operations

Security operations centers burn through analysts faster than any other cybersecurity function, with Tier 1 analysts handling repetitive alert triage that's perfectly suited for AI automation. Companies like Crogl* are building toward fully autonomous SOCs, where AI agents handle everything from initial alert investigation to threat containment, promising to solve the SOC staffing crisis while dramatically reducing response times.

SIEM and SIEM Operations

Security information and event management represents one of the most human-intensive categories in enterprise security, requiring dedicated teams to write detection rules, tune alert thresholds, and investigate incidents across massive data volumes. Legacy SIEM platforms like Splunk and IBM QRadar were architected for batch processing and manual investigation workflows that fundamentally don’t scale with modern threat volumes. Vega is building an AI-native SIEM that autonomously correlates security events, writes and optimizes detection logic, and conducts initial incident investigation without human intervention, transforming SIEM from a human-intensive platform into an autonomous threat detection and response engine.

Endpoint Security

Traditional endpoint detection and response (EDR) platforms from CrowdStrike, SentinelOne, and Microsoft Defender generate massive volumes of alerts that require human analysts to investigate potential threats and determine appropriate responses. While these platforms excel at detection, they struggle with the autonomous decision-making required to differentiate between sophisticated attacks and benign system behavior across hundreds of thousands of endpoints. The category is ripe for AI transformation because endpoint security fundamentally benefits from real-time, contextual analysis at machine speed—something human analysts simply cannot provide at enterprise scale. Companies like Koi and Glow are addressing a critical blind spot that traditional EDR platforms miss: the non-binary software layer of packages, browser extensions, AI models, and containers that legacy tools were never built to see or control. Koi’s AI-powered risk engine analyzes code and threat indicators to provide preventative governance before software executes on endpoints, demonstrating how AI can extend protection beyond what rule-based systems can achieve.

Offensive Security/Pentesting

Penetration testing remains one of cybersecurity's most artisanal practices, with manual testing cycles taking weeks to complete assessments that AI agents could execute continuously. Incumbent providers like Bishop Fox's penetration testing services and traditional consulting firms are structurally unable to offer the speed and coverage that autonomous agents provide. Many companies have flocked to the market, with players like Runsybil, XBOW, Terra Security, and Tenzai pioneering AI-native offensive security platforms that can conduct comprehensive vulnerability assessments, simulate attack scenarios, and provide continuous red team exercises at machine speed. The value proposition is compelling: transform periodic manual assessments into continuous, comprehensive security validation.

Vulnerability Management

Vulnerability management (VM) represents perhaps the strongest consolidation opportunity in enterprise security, with fragmented incumbents like Rapid7, Qualys, and Tenable offering overlapping solutions that require extensive manual prioritization and remediation workflows. Traditional VM platforms excel at discovery, but fail catastrophically at intelligent prioritization—they generate thousands of alerts without the contextual reasoning needed to focus on what actually matters. Recent research shows that 57% of security teams dedicate 25-50% of their time to vulnerability management operations, with over half spending more than five hours weekly just consolidating and normalizing vulnerability data. AI employees can fundamentally transform this workflow by autonomously correlating vulnerability data across assets, business context, and threat intelligence to provide actionable remediation guidance.

Zafran, for example, is building a continuous threat exposure management platform that correlates vulnerability findings with an organization's actual security controls, analyzing whether deployed defenses already mitigate identified threats, and is now using that contextual understanding as the foundation for AI agents that autonomously prioritize and remediate vulnerabilities. Platforms like this shift vulnerability management from compliance-driven patching to intelligence-driven risk mitigation, consolidating multiple tools while reducing the manual work that dominates current enterprise programs.
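To make contextual prioritization concrete, here is a minimal Python sketch of risk-based ranking that folds threat intelligence, business context, and already-deployed controls into a single score. It is not Zafran's method or any vendor's API: the `Finding` and `Asset` types, the control labels (`"edr"`, `"waf"`), the placeholder identifiers (`CVE-A` and so on), and the scoring weights are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    cve_id: str                # placeholder identifier, not a real CVE
    asset: str                 # name of the affected asset
    cvss: float                # base severity, 0.0-10.0
    exploited_in_wild: bool    # threat-intelligence signal
    # Hypothetical: labels of controls that would neutralize this finding.
    mitigated_by: set[str] = field(default_factory=set)


@dataclass
class Asset:
    name: str
    # Hypothetical scale: 0.0 (throwaway lab box) to 1.0 (crown jewel).
    business_criticality: float
    # Labels of security controls actually deployed on this asset.
    deployed_controls: set[str] = field(default_factory=set)


def risk_score(finding: Finding, asset: Asset) -> float:
    """Residual risk of one finding, given the asset's context."""
    # A finding already neutralized by a deployed control scores zero.
    if finding.mitigated_by & asset.deployed_controls:
        return 0.0
    # Hypothetical weighting: known exploitation doubles the severity signal.
    threat = 2.0 if finding.exploited_in_wild else 1.0
    return finding.cvss * threat * asset.business_criticality


def prioritize(findings: list[Finding], assets: list[Asset]) -> list[tuple[str, str, float]]:
    """Return (cve_id, asset, score) tuples, highest residual risk first."""
    by_name = {a.name: a for a in assets}
    scored = [(risk_score(f, by_name[f.asset]), f) for f in findings]
    # Sort by descending score; break ties by identifier for a stable order.
    scored.sort(key=lambda pair: (-pair[0], pair[1].cve_id))
    return [(f.cve_id, f.asset, round(score, 2)) for score, f in scored]


if __name__ == "__main__":
    assets = [
        Asset("payments-db", 1.0, {"edr"}),
        Asset("build-runner", 0.4),
        Asset("web-frontend", 0.8, {"waf"}),
    ]
    findings = [
        Finding("CVE-A", "web-frontend", 9.8, True, {"waf"}),
        Finding("CVE-B", "payments-db", 7.5, True),
        Finding("CVE-C", "build-runner", 9.1, False),
        Finding("CVE-D", "payments-db", 5.3, False, {"edr"}),
    ]
    for row in prioritize(findings, assets):
        print(row)
```

In this toy run, the critical-severity `CVE-A` falls to the bottom because a deployed control already covers it, while the lower-severity but actively exploited `CVE-B` on the most critical asset rises to the top. Scoring mitigated findings as zero, rather than merely lower, is what lets a mitigation-aware approach shrink the remediation queue instead of just reordering it; a production system would likely use richer exploitability signals than a fixed multiplier.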

Betting on David, Not Goliath

At Menlo Ventures, we’re excited about AI-native startups that bypass the architectural constraints holding back security incumbents. The convergence of exploding threat volumes, talent shortages, and AI capabilities creates a rare moment where startups can displace the incumbents built for human-scale operations. It takes exceptional ambition to challenge security incumbents trusted by enterprises for decades, and we’re excited to back founders with that vision.

* Backed by Menlo Ventures

Venky is a partner at Menlo Ventures focused on investments in both the consumer and enterprise sectors. He currently serves on the boards of Abnormal AI, Aisera, Appdome, Aurascape, BitSight, ConverzAI, MealPal, Obsidian, Sonrai Security, and Unravel Data. Prior to joining Menlo, he was a managing partner at Globespan Capital…

Rama is a partner at Menlo Ventures, focused on investments in cybersecurity, AI, and cloud infrastructure. He is passionate about partnering with founders to build the next generation of cybersecurity, infrastructure, and observability companies for the new AI stack. Rama joined Menlo after 15 years at Norwest Venture Partners, where…

As an investor at Menlo Ventures, Sam focuses on SaaS, AI/ML, and cloud infrastructure opportunities. She is passionate about supporting strong founders with a vision to transform an industry. Sam joined Menlo from the Boston Consulting Group, where she was a core member of the firm’s Principal Investors and Private…